How data drives the value of software testing solutions

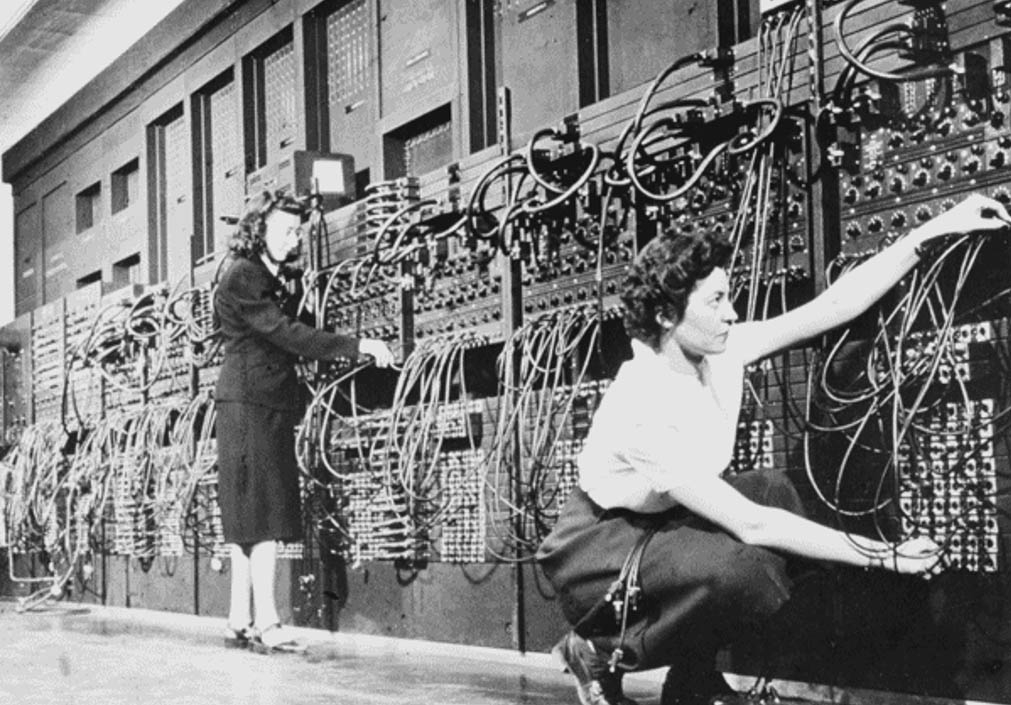

Let us compare a few figures. ENIAC, one of the first general-purpose electronic computers, was built in the USA and publicly announced on February 14, 1946. It weighed 30 tons and contained about 18,000 vacuum tubes. The machine performed about 5,000 additions per second. ENIAC had no RAM or built-in program memory in the modern sense; it was programmed with switches and cables.

The computer that guided the Apollo spacecraft was no less a technological marvel for the early 1960s than ENIAC had been for the pre-computer era. Today, we can say that the spacecraft that landed on the Moon and carried the astronauts back to Earth was controlled by little more than an advanced calculator. Here are the brief technical specifications of that space computer.

- Frequency: 2 megahertz.

- RAM: 4 kilobytes.

- Read-only memory: 72 kilobytes.

- Performance: 43 thousand operations per second.

Just compare these numbers with the capabilities of your smartphone.

And a few more statistics.

To earn even a very modest profit, a single stock trader performs about 40 transactions per second. An extra millisecond spent pondering or entering key numbers can turn a potentially profitable transaction into a disastrous loss. The critical response time for data transmission over fiber-optic cables between New York and Chicago is 12 milliseconds. Anything slower is unacceptable.

It used to be said: "He who owns the information owns the whole world." Indeed, the current amount of data that companies specializing in quality assurance (QA) have to process each second was never dreamed of by the first space explorers.

Now is the time to talk about the place of Big Data in the software testing process.

Compressed time and a clear future

It seems that only yesterday a fast computer and a competent programmer were enough to test new software. Today, the work of a competitive software testing agency is unthinkable without extensive use of artificial intelligence and the enormous computing power needed for billions of calculations per second.

Time is shrinking at an alarming rate. It is no longer minutes but milliseconds that are critical. At the same time, thanks to new methods of processing immense arrays of complex and diverse data, we get the opportunity not only to predict the future but to see it clearly.

It would be strange if these endless possibilities were not used to develop new software testing solutions.

Big Data analysis involves many subtle definitions. Sometimes even experts get confused when trying to explain them quickly. So it makes sense for everyone interested in the details to read specialized publications devoted to this topic.

We will simply list the main types of data analysis that any reliable and competitive software testing agency uses in its comprehensive quality assurance (QA) approach.

Here they are:

- Descriptive analytics;

- Diagnostic analytics;

- Predictive analytics;

- Prescriptive analytics.

The easiest way to explain the scope of each item on this list is with specific examples.

For example, Owens Corning, which supplies materials for wind turbine blades, has used predictive analytics to reduce the time required to test any new material for its products from ten days to two hours.

La-Z-Boy used data analytics to optimize the business aspects of its furniture retail operation, including finance, logistics, cargo insurance, and hiring, across 20 locations around the world.

So, where are the predictions of the future?

From the above, it seems clear that Big Data is an invaluable and bottomless source of information about anything. The only catch is your ability to ask the right questions. And it is precisely in the software testing process that the predictive capabilities of data analysis are revealed, perhaps, most vividly.

Judge for yourself. Using predictive analytics (PA), quality assurance companies get the following opportunities:

- By studying the error logs, predict where new bottlenecks may arise once the current errors are eliminated, and promptly fix bugs before they have a chance to appear.

- By researching social networks, study the preferences, expectations, and reactions of users. Moreover, such an analysis makes it possible to predict trends that are only beginning to emerge. This allows developers to adjust their strategic plans ahead of time.

- Remember the example with turbine blades? In the same way, building an optimal testing solution allows software makers to minimize the time spent on developing new programs and improving existing ones.
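To make the first point on the list a little more tangible, here is a minimal sketch of how error logs might feed a prediction. It is an illustration, not the method of any particular testing agency. It assumes the logs have already been reduced to hypothetical `(week, module)` pairs, fits a simple least-squares trend line to each module's weekly error counts, and flags modules whose error rate is rising. The function names and the `min_slope` threshold are invented for this example.

```python
from collections import defaultdict


def weekly_error_counts(log_records):
    """Turn (week, module) pairs into {module: [errors per week, ...]}.

    Simplification: only weeks that appear somewhere in the log are counted,
    so a week with no errors at all in any module is skipped.
    """
    weeks = sorted({week for week, _ in log_records})
    position = {week: i for i, week in enumerate(weeks)}
    counts = defaultdict(lambda: [0] * len(weeks))
    for week, module in log_records:
        counts[module][position[week]] += 1
    return dict(counts)


def trend_slope(counts):
    """Least-squares slope of the counts against the week index."""
    n = len(counts)
    if n < 2:
        return 0.0  # not enough history to estimate a trend
    mean_x = (n - 1) / 2
    mean_y = sum(counts) / n
    numerator = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(counts))
    denominator = sum((x - mean_x) ** 2 for x in range(n))
    return numerator / denominator


def rank_risky_modules(log_records, min_slope=0.5):
    """Return (module, slope) pairs with a rising error trend, steepest first."""
    slopes = {
        module: trend_slope(counts)
        for module, counts in weekly_error_counts(log_records).items()
    }
    risky = [(m, s) for m, s in slopes.items() if s >= min_slope]
    return sorted(risky, key=lambda pair: pair[1], reverse=True)


# Hypothetical sample data: errors in "checkout" grow week over week,
# "search" stays flat, and "login" improves.
sample_log = (
    [(1, "checkout")] + [(2, "checkout")] * 2 + [(3, "checkout")] * 3
    + [(w, "search") for w in (1, 2, 3) for _ in range(2)]
    + [(1, "login")] * 3 + [(2, "login")] * 2 + [(3, "login")]
)
print(rank_risky_modules(sample_log))
```

A real predictive model would use far richer signals (code churn, test coverage, user reports), but the idea is the same: past data points to where testers should look next.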

The format of this article does not allow us to dwell on the particularities of each of the data analysis methods mentioned or on the specific ways software testing agencies put the results into practice. Nor is there room for a comprehensive explanation of key concepts, such as the difference between quantitative data and qualitative data. The purpose of this publication is only to outline general trends that appear at the intersection of several sciences and how they are reflected in the practice of successful companies. However, knock, and it shall be opened to you: find a competent software testing blog, read what the expert practitioners have to say on the topic that interests you, and very soon you will be speaking the same language as they do.